Numerical computation of derivative

Hi, my dear readers, it's me again, reincarnated from the ashes of time and space. For this time I want to tell you about one thing, which could be quite useful - numeric calculation of derivative. Yes, it is quite basic stuff for people who are involved in this, but I'm sure there are a lot of ones who don't know this. And mind - this isn't a plain article for beginners, because at the end I'll show you one very interesting thing, which I found yesterday in a textbook and therefore decided to write this article.

1. Preface

Let's remember what a derivative is.

Definition

Function f'(x) is called the derivative of function f(x) if and only if

f'(x) = lim f(x+h)-f(x)

h->0 -----------

h

The expression lim(x->a) f(x) means that this is limit of the following expression. It shows us which value the function f(x) will have if we will let x come closer and closer and closer to a.

Of course, sometimes we can't find this value, for example consider the function:

f(x) = { 1, if x<1

{ 0, if x>=1

In the point x->1 our function won't have any limit, because in this case the notion of limit dessipates on two things - left limit and right limit. Left limit is 1, right - 0. While in order to function have limit in point a, its left and right limits should be equal.

So, you see, a derivative doesn't have to exist at an arbitrary point.

The derivative could be imagined as the velocity of the function f(x) and the second derivative (derivative from the first derivative) could be imagined as its acceleration.

So, we have a task - how to compute a derivative, if all we know is the table of values of f(x), not its analytical expression, and all we have is a computer?

2. The answer

The answer is primitive - look at the definition of a derivative. It's a limit. We said that limit shows us which value function will have when we come closer and closer to specified point. So, if in that expression we will take h very small, then by using this fraction:

f(x+h)-f(x)

-----------

h

we will get derivative in this point.

Of course, the smaller h is, the better our approximation comes to f'(x).

So, basically, that's it.

As long as it doesn't matter for the cpu to divide your number by 0.1 or by 0.0000000000001 (I do hope), it would be better to keep the h as small as possible for your favourite float format.

Note, that you should check, if the f(x+h)-f(x) is too big, because when f(x+h)-f(x) will become reasonably high, the expression will turn to infinity, and your cpu can't work with it yet. ;)

You should compute this constant by yourself, because you need concrete value for h and concrete data type. How - is written here:

Let's suppose that your favourite float type could contain K as the biggest number. You have specified precision h.

Suppose that M = sup[ f(x+h)-f(x) ]. "Sup" means supremum, maximal value of the function on a given range. Then M/h should be less than K in order to be stored in memory.

M

--- < K

h

We know, that h>0. Therefore (and only therefore)

M < K*h (*)

This means that your function shouldn't make oscillations bigger than this number M, or you wouldn't be able to compute derivative from it.

How to fight against this? Answer: find a reasonable compromise between precision and amount of oscillations. You see, the bigger h is, the bigger oscillations your function could make, because M will be bigger, look at (*).

And now remember another thing - the cpu has a limit on floating numbers not only in the upper range, but also in lower range, i.e. there's also a limit on storing extremely low values.

So, you should also check if f(x+h)-f(x) is too small for the cpu. Do it analogically as in the previous example. I.e., find the constant m = inf [f(x+h)-f(x)]. ("inf" means infinitum - minimal value of a function on a given range).

Now let's discuss another problem - how to store our function.

f(x) could be set both by table or by some function of the programming language, which computes it from a given x.

In the second case you could easily compute f(x+h) - I suppose you understand how.

In the first case - you could act by two ways - one way is to compute Lagrange's interpolation polynom (see my other article in this Hugi) and then compute f(x+h) from it (this method is good when you need perfect precision) and another one is simply to take h as the distance between two adjacent values in array, so f(x+h) will be the next element in your array, and f(x) - the current element.

What does the phrase "to take h as the distance between two adjacent values in an array" mean?

Values in an array don't have a distance between each other (except distances in memory - but we don't care about this)!

It's right, but think - from where do you get this array? Most probably you get it from the real life, and most probably this is the result of some measures of some parameters, i.e. these are numbers, taken from some gear by some time distances. Most probably these time distances are equal.

What do I mean, concretely? Imagine digital audio. Your sound card takes a number from its ADC with the frequency F Hz (F times in a second, F could be, for example, 44100, 22050 etc) and stores them in memory. You get an array. So, now you could say, that the time distance between your numbers in array is 1/F. So, when you sample sound on 44100 Hz, time distances between individual samples are equal to 0.000022675 sec.

So, let's take such parameter for h (of course, in your concrete example the way of determining of "distance" could be completely abstract, and of course in your case you won't get 0.000022675, most probably).

Now you are able to compute f(x+h) and f(x) - first is the next value in your array (because all numbers are taken with the distance of h in our case), and second is current value in your array.

So, now you are able to compute derivative from function, set by array.

Remember the Cyan Phase M-Derive effect in Buzz? I suppose that all it does is shown above. The effect is quite shitty, though. But it doesn't mean the derivative is unusable.

Remember my article from Hugi 24 about distortion? There we needed a derivative for the Newton method, so it is computed the way shown above.

Now we come to more advanced things.

3. Quality check

It's bad just to use the formula without understanding its properties. We should find out how closely it approximates f'(x). Let's do it. For this we will need to have a notion of the so-called "big o", or O. Let's introduce it.

Definition

We have functions f(x) and g(x). If there is a number c>0 and the following is accomplished: |f(x)| <= c*|g(x)| in some range of point x0, then f(x) is called as limited function as compared with g(x). Or it could be written as f(x) = O(g(x)). For example, f(x) = x^2, and g(x) = x^2 + 3x + 5. It's obvious that we could find such a number that f(x) will become O(g(x)), because f(x) is the so-called main part of function g(x).

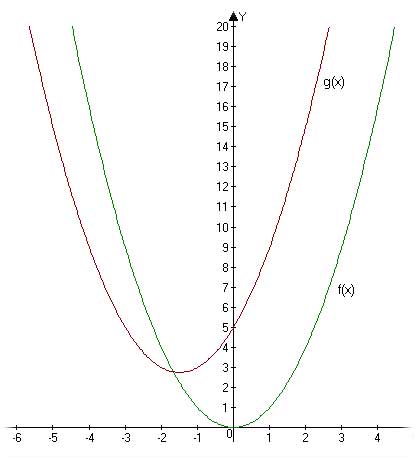

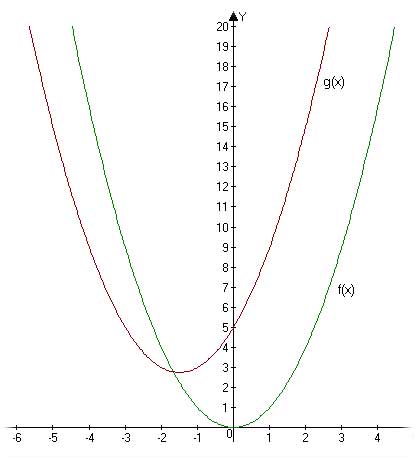

Look at this picture:

What will happen if we move g(x) up (i.e. we multiply it with some constant c), while keeping f(x) at its place? Right, we will find such a constant that c*g(x) will be upper than f(x) for all points.

So, now you know what O(f(x)) is. By the way, complexities of algorithms are written exactly in this form, for example the complexity of quick sort is O(log2(n)), if I remember right, where n is the quantity of elements in an array. It means that the complexity could be both log2(n)+578987x+5728937492 or 100*log2(n)+293742x+234872938, the most important fact is that the main part of such an expression will be log2(n).

Now the other fact. You should be familiar with the Taylor theorem for further reading. In previous Hugi Adok showed this in his article about approximation of functions. Be sure to check it out (hey, Claus, could you prove this theorem? - I did a year ago on exam :) or find it in any text book on math analysis.

Let's find, how close the expression

f(x+h)-f(x)

-----------

h

comes to f'(x).

Let's suppose that f(x) has a limited second derivative in the neighbourhood of x. The canonical way of Taylor's formula:

f(x) = f(x0) + f'(x0)(x-x0)^1 + f''(x0)(x-x0)^2 + ...

------ -------

1! 2!

Let x in this expression be equal to (x+h), and x0 - to x.

Then

f(x+h) = f(x) + f'(x)*h + h^2*f''(x) + o(f''(x))

---

2

- the last member is a small remainder, forget about it.

From here flows, that

f(x+h)-f(x) f'(x) + h * f''(x)

----------- = ---

h 2

or

f(x+h)-f(x) f'(x) + O(h) (1)

----------- =

h

- now you understand why.

Remember this fact. We see that this formula gives us f'(x) AND a small remainder, which is limited as compared with h.

Now let's think. If the function is differentiable in point x, then the following is also true:

f'(x) = lim f(x+h)-f(x-h)

h->0 -------------

2h

Just why not - we simply take 2h instead of h. It's equal.

AND NOW LISTEN TO ME. THIS EXPRESSION GIVES A MUCH CLOSER APPROXIMATION TO THE ACTUAL DERIVATIVE THAN PREVIOUS, WHICH WAS GIVEN EARLIER.

Let's prove this.

Let's suppose that f(x) has a limited third derivative in the neighbourhood of point x.

Then, from Taylor's theorem, with the same substitutions as earlier,

f(x+h) = f(x) + f'(x)*h + 1 f''(x)*h^2 + 1 f'''(x)*h^3

--- ---

2 6

f(x-h) = f(x) - f'(x)*h + 1 f''(x)*h^2 - 1 f'''(x)*h^3

--- ---

2 6

Now let's subtract the second expression from the first and then divide it by 2h.

f(x+h) - f(x-h)

--------------- = f'(x) + 1 [ f'''(x) + f'''(x) ] * h^2 =

2h ---

6

= f'(x) + O(h^2)

- you understand why.

So,

f(x+h) - f(x-h)

--------------- = f'(x) + O(h^2) (2)

2h

And this says all. Look at the difference between (1) and (2)! O(h^2) is less than O(h) because h is small, therefore a fractional number, which is close to zero. When we find h^2 from h when h lies in (0;1), the result, h^2, is smaller than h! E.g. 0.9^2 = 0.81 etc.

So, the second formula is better than the first formula, BY THE ORDER OF MAGNITUDE. This means that in the first case we add O(h), a big number, and in the second case - O(h^2), a much smaller number, where h is close to zero.

Oh! Just who could even think! So, always use (2) instead of (1)!!!!

This was the fact about which I told you at the beginning. You see, it is worth the reading. I found it in the book, which you could find in the attached lists of used literature. You see, sometimes it's useful to read books.

I can only add, that with this formulae you could compute derivatives of parametric functions also, suppose we have x(t) and y(t), then

y'(x) = y'(t)

-----

x'(t)

Just use two separate derivatives.

4. Conclusion

So, you have seen, what the methods of math are. You have seen that it isn't enough just to put some delirium down on your paper and then say that you are genious mathematician. Math has another ways, another philosophy. Everything should be deduced, compared, and only then used. You see, just a little "quality check" of an approximation have lead us to more effective formula! That's basically all.

Will be happy to answer your question and to receive your feedback.

Also I can tell you about numerical integrals computation in the next Hugi - mail me if you have such a need.

iliks or

Ilya Palopezhencev

email: iliks@mail.ru or iliks@chat.ru

Used Literature:

1. Kudryavcev L. D. Math analysis course. Moscow, 1988; volume III, pp. 326 - 328